Even the most highly trained and experienced person sometimes needs a hand. For astronauts aboard the International Space Station, that helping hand comes from other crew members, from experts on the ground, and, increasingly, from augmented reality (AR) and virtual reality (VR).

The first use of AR on station, a set of high-tech goggles called Sidekick, provided hands-free assistance to crew members through high-definition holograms that showed 3D schematics or diagrams of physical objects as they completed tasks. It also included video teleconference capability, giving the crew direct support from flight control, payload developers, or other experts.

Crew members' use of these tools continues to expand in frequency and scope. Here are nine ways we use AR and VR for research aboard the space station:

VR control of robots

Pilote, an investigation from ESA (European Space Agency) and France’s National Center for Space Studies (CNES), tests remote operation of robotic arms and space vehicles using VR with interfaces based on haptics, or simulated touch and motion. Results could help optimize the ergonomics of workstations on the space station and future spacecraft for missions to the Moon and Mars.

Pilote compares existing and new technologies, including some recently developed for teleoperation and others used to pilot Canadarm2 and the Soyuz spacecraft. The investigation also compares astronaut performance on the ground with performance during long-duration space missions. Designs based on Earth testing rely on ergonomic principles that do not fit microgravity conditions, which is why the testing is performed in space.

Pedaling through space

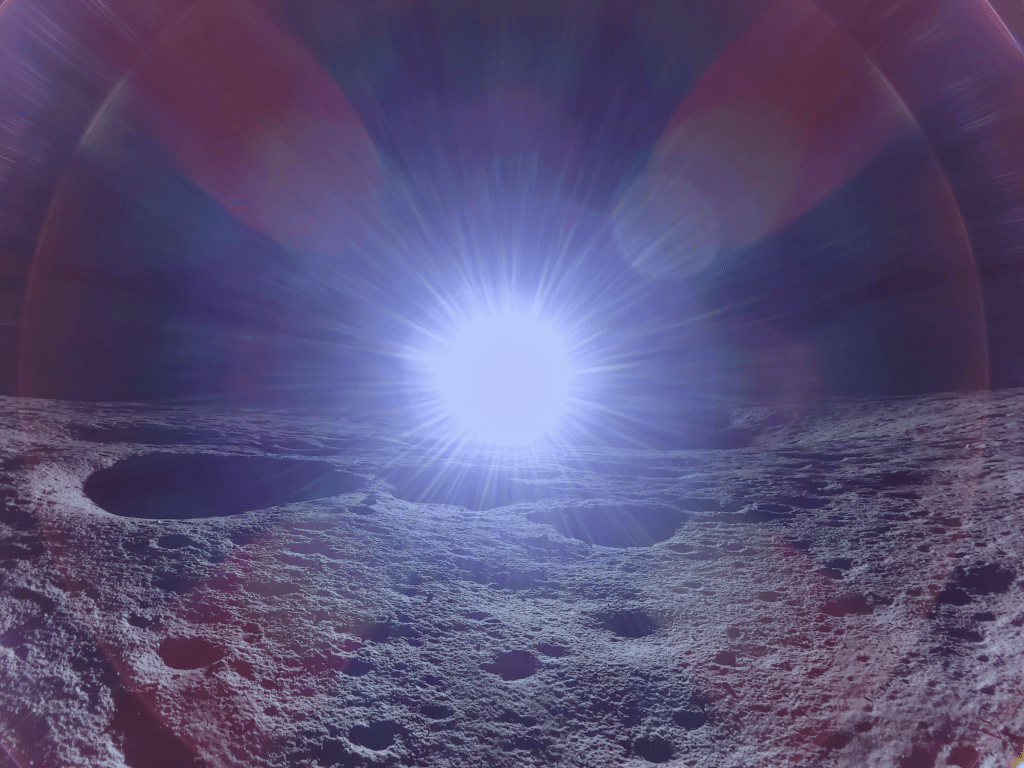

Immersive Exercise tests whether a VR environment for the station's exercise bicycle, CEVIS, increases motivation to exercise and gives astronauts a better experience during their daily training sessions. If crew members enjoy the VR experience, a headset and associated environments (cycling around a lunar crater, anyone?) could become a permanent fixture of exercise sessions. On Earth, this type of VR could benefit people in confined or isolated places.

AR maintenance assists

T2 AR tests the use of AR to help crew members inspect and maintain the space station's T2 Treadmill. Astronaut Soichi Noguchi of the Japan Aerospace Exploration Agency (JAXA) kicked off the first of a series of tests in April. On future space missions, significant communication delays mean crew members will need to be ready to perform this type of task without assistance from the ground. AR guidance for complex spacecraft maintenance and repair could also reduce the time astronauts spend training for and completing such tasks. Running on tablets or headsets, AR applications act as smart assistants: they interpret what the camera sees and what a crew member does, then suggest the next step to perform. Crew members can also operate the applications by speaking or gesturing.

A cool upgrade

NASA’s Cold Atom Lab (CAL) is the first quantum science laboratory in Earth orbit, hosting experiments that explore the fundamental behaviors and properties of atoms. About the size of a minifridge, it was designed to enable in-flight hardware upgrades. In July, the Cold Atom Lab team successfully demonstrated using an AR headset to assist astronauts with upgrade activities.

Astronaut Megan McArthur donned a Microsoft HoloLens while she removed a piece of hardware from inside CAL and replaced it with a new one. Because the HoloLens is see-through, McArthur could still easily see the U.S. Destiny module around her. A small front-facing camera on the headset let Cold Atom Lab team members see what she was seeing; normally they rely on a camera positioned behind or above the astronaut, which often provides an obscured view of the CAL instrument. The CAL team could also add virtual graphics, such as text or drawings, to McArthur's field of view. For example, as she looked at a large cable harness, the team could add an arrow designating a particular cable to unplug or a zip tie to cut.

Time travel

Astronauts need to accurately perceive time and the speed of objects in their environment in order to perform tasks reliably. Research shows that our perceptions of time and space overlap, and the speed of the body's movement may affect time perception. Other aspects of spaceflight that can affect time perception include stress and disrupted sleep and circadian rhythms.

Time Perception examines changes in how humans perceive time during and after long-duration exposure to microgravity. Crew members wear a head-mounted VR display, listen to instructions, and respond using a finger trackball connected to a laptop. They take the tests once a month during flight, as well as before launch and after returning to Earth, so researchers can evaluate adaptive changes.

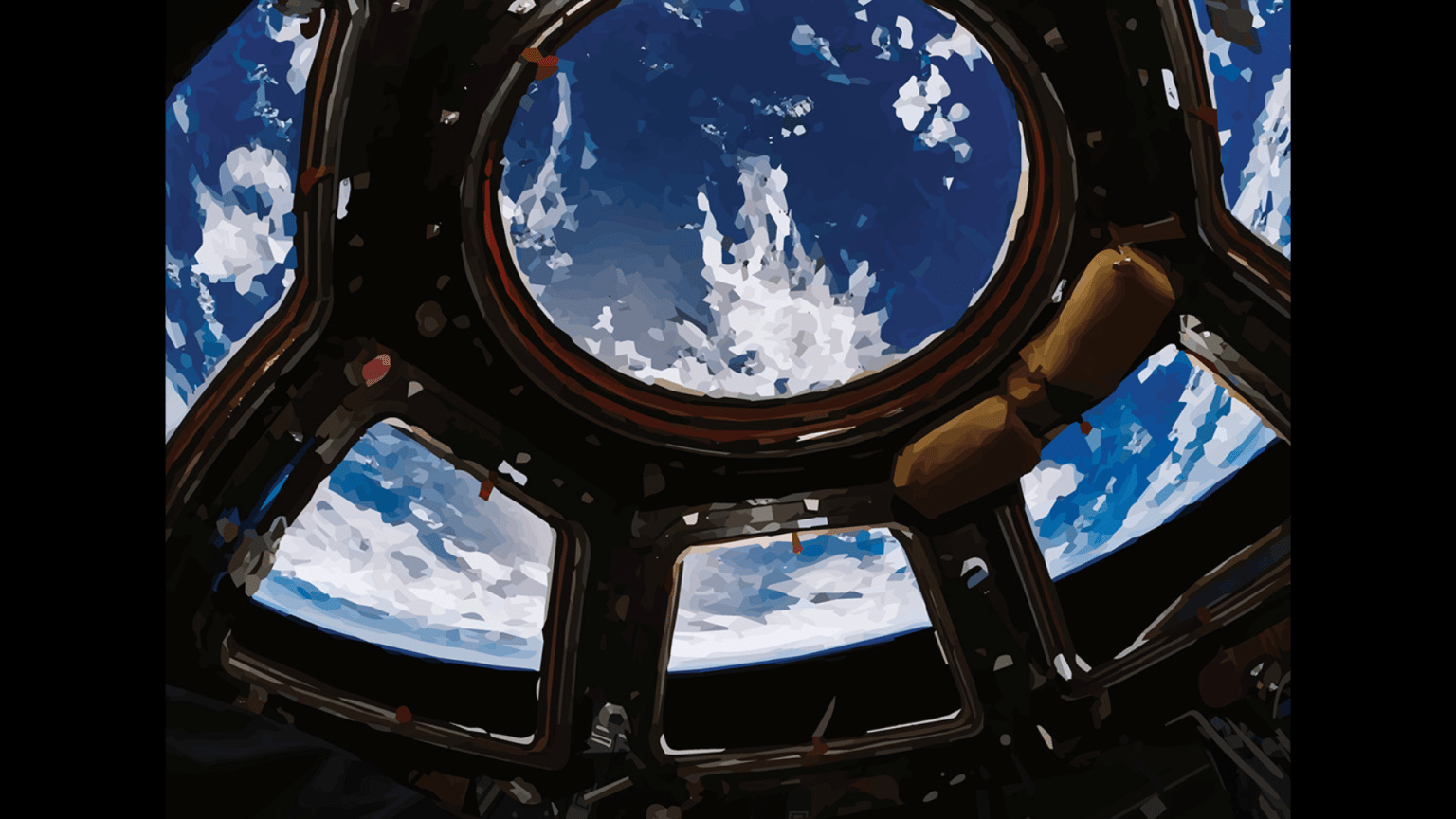

Just like being there

The ISS Experience is an immersive VR series filmed over multiple months to document different crew activities, from science conducted aboard the station to a spacewalk. The series uses special 360-degree cameras designed to operate in space to transport audiences to low-Earth orbit and make viewers feel like astronauts on a mission. It also gives audiences on Earth a better sense of the challenges of adapting to life in space, the work and science performed there, and the human interaction among astronauts. The series could spark ideas for research or programs that improve conditions for crew members on future missions and inspire future microgravity research that benefits people on Earth.

Get a better grip

The way humans grip and manipulate objects evolved in the presence of gravity, and microgravity changes the cues we use to control these actions. An ESA investigation, GRIP, studies how spaceflight affects gripping and manipulating objects. Results could identify potential challenges astronauts may face when they move between environments with different levels of gravity, such as going from the microgravity of a lengthy voyage in space to the surface of Mars, where gravity is about one-third of that on Earth. The study also could contribute to the design and control of touch-based interfaces, such as remote-control systems, for future space exploration.

Controlling movement in microgravity

To control the movement and position of our bodies and judge the distance between our bodies and other things, we combine what we see, feel, and hear with information from the inner ear, or vestibular system. VECTION looks at how changes in gravity affect these abilities in astronauts and how to address any resulting issues. Collecting data at multiple points during flight on the space station and after return to Earth allows researchers to investigate how astronauts adapt to and recover from these effects. The investigation also could help drivers, pilots, and operators of robotic manipulators control vehicles in low-gravity environments.

An astronaut’s reach should not exceed their grasp

When a person reaches out to grab an object, their brain coordinates hand movement with information from senses such as sight and hearing. ESA's GRASP investigation seeks to better understand the role that perception of gravity plays in this action by observing astronauts as they use a VR headset to reach for virtual objects. To live in space, astronauts must adapt physically to microgravity, and their brains must adapt to the absence of the usual sensations of up and down. By providing insight into this adaptation, GRASP could help support future long-term space exploration.